Time & Space Complexity Study Notes

By BYJU'S Exam Prep

Updated on: September 25th, 2023

I. Asymptotic Notations:

- If we write f(n) = O(g(n)), then there exists a function f(n) such that ∀ n ≥ n0, f(n) ≤ cg (n) with any constant c and a positive integer n0.

OR

- f(n) = O(g(n)) means we can say g(n) is an asymptotic upper bound for f(n).

Table of content

-

Example-1:

Let f(n) = n2 + n + 5. Then f(n) is O(n2), and f(n) is O(n3), but f(n) is not O(n).

-

Example-2:

Let f(n) = 3n . Then f(n) is O(4n) but not f(n) is not O(2n)

Note: O(1) refers to constant time. O(n) indicates linear time; O(nk) (k fixed) refers to polynomial time; O(log n) is called logarithmic time; O(2n) refers to exponential time, etc.

O(1) < O(log n) < O(n) < O(n2) < O(2n)

Omega (Ω):

- If we write f(n) = Ω(g(n)), then there exists a function f(n) such that ∀ n ≥ n0, f(n) ≥ cg(n) with any constant c and a positive integer n0. Or

- f(n) = Ω(g(n)) means we can say Function g(n) is an asymptotic lower bound for f(n).

- Example-1: Let f (n) = 2n2 + 4n + 10. Then f (n) is Ω(n2)

Theta (θ):

- If we write f(n) = θ(g(n)), then there exists a function f(n) such that ∀ n ≥ n0, c1g(n) ≤ f(n) ≤ c2g(n) with a positive integer n0, any positive constants c1 and c2. Or

- f(n) = θ(g(n)) means we can say Function g(n) is an asymptotically tight bound for f(n).

-

Example-1:

Let f (n) = 2n2 + 4n + 10. Then f (n) is θ(n2)

Small Oh (o):

- If we write f(n) = o(g(n), then there exists a function such that f(n) < c g(n) with any positive constant c and a positive integer n0. Or

- f(n) = o(g(n) means we can say Function g(n) is an asymptotically tight upper bound of f(n).

- Example: n1.99 = o(n2)

Small Omega (ω):

- If we write f(n) = ω(g(n)), then these exists a function such that f(n) > cg(n) with any positive constant c and a positive integer n0. Or

- f(n) = ω(g(n)) means we can say g(n) is asymptotically tight lower bound of f(n).

- Example: n2.00001 = ω(n2) and n2 ≠ ω(n2)

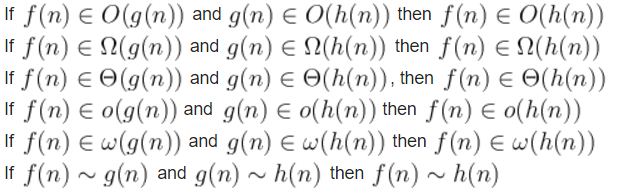

II. The relationship between asymptotic notations :

III. Properties of Asymptotic notations

1.Reflexive Property:

3.Transitive Property

IV. Analysis of Algorithms

The complexity of an algorithm is a function describing the efficiency of the algorithm in terms of the amount of data the algorithm must process. Usually there are natural units for the domain and range of this function.

- Algorithm can be classified by the amount of time they need to complete compared to their input size.

- The analysis of an algorithm focuses on the complexity of algorithm which depends on time or space.

There are two main complexity measures of the efficiency of an algorithm:

1. Time Complexity:

- Worst case time complexity: It is the function defined by the maximum amount of time needed by an algorithm for an input of size n.

- Average case time complexity: The average-case running time of an algorithm is an estimate of the running time for an average input. Computation of average-case running time entails knowing all possible input sequences, the probability distribution of occurrence of these sequences, and the running times for the individual sequences.

- Amortized Running Time: It is the time required to perform a sequence of (related) operations is averaged over all the operations performed. Amortized analysis guarantees the average performance of each operation in the worst case.

- Best case time complexity: It is the minimum amount of time that an algorithm requires for an input of size n.

2. Space Complexity:

V. What is Recurrence Relations

Methods to Solve the Recurrence Relation

There are three methods to solve the recurrence relation given as: Master method , Substitution Method and Recursive Tree method.

1. Master Method:

The master method gives us a quick way to find solutions to recurrence relations of the form T(n) = aT (n/b) + f(n). Where, a and b are constants, a ≥ 1 and b > 1)

- T(n) = aT(n/b) + f (n) where f(n) ∈ Θ(nd), d ≥ 0

- Case-1: If a < bd, T(n) ∈ Θ(nd)

- Case-2: If a = bd, T(n) ∈ Θ(nd log n)

- Case-3: If a > bd, T(n) ∈ Θ(nlog b a )

- Examples:

- T(n) = 4T(n/2) + n ⇒ T(n) ∈ Θ(n2)

- T(n) = 4T(n/2) + n2 ⇒ T(n) ∈ Θ(n2log n)

- T(n) = 4T(n/2) + n3 ⇒ T(n) ∈ Θ(n3)

2. Iterative Substitution Method:

Recurrence equation is substituted itself to find the final generalized form of the recurrence equation.

T(N) = 2T(N/2)+bN

Here T(N/2) is substituted with 2T((N/2)/2)+b(N/2)

T(N) = 4T(N/4)+2bN (Similarly substitute for T(N/4) T(N) = 8T(N/8)+3bN After (i-1) substititions, T(N) = 2iT(N/2i)+ibN

When i=log(N), N/2i=1 and we have the base case.

T(N) = 2log(N)T(N/2log(N))+ibN

T(N) = N T(1) +log(N)bN

T(N) = Nb +log(N)bN

Therefore T(N) is O(Nlog(N))

Using recursion method, n element problem can be further divided into two or more sub problems. In the following

3. Recursion Tree Method:

figure, given problem with n elements is divided into two equal sub problems of size n/2. For each level of the tree the number of elements is N. When the tree is split so evenly the sizes of all the nodes on each level. Maximum depth of tree is logN (number of levels).

Recurrence relation T(n) = 2 T(n/2) + O(n) = Θ(Nlog(N)).

Example-1: Find the Time complexity for T(N) = 4T(N/2)+N.

Let T(N) = aT(N/b)+f(N).

Then a=4, b=2, and f(N)=N

Nlogba = Nlog24 = N2.

f(N) is smaller than Nlogba

Using case 1 of master theorem with ε=1.

T(N) = Θ(Nlogba) = Θ(N2).

Example-2: Find the Time complexity for T(N) = 2T(N/2) = Nlog(N)

a=2, b=2, and f(N)=Nlog(N)

Using case 2 of the master theorem with k=1.

T(N) = Θ(N(logN)2).

Example-3: Find the Time complexity for T(N) = T(N/4) + 2N

a=1, b=4, and f(N)=2N

logba = log41 = 0

Nlogb = N0 = 1

Using case 3: f(N) is Ω(N0+ε) for &epsilon=1 and af(N/b) = (1)2(N/4) = N/2 = (1/4)f(N)

Therefore T(N) = Θ(N)

Example-4: Find the Time complexity for T(N) = 9T(N/3) + N2.5

a=9, b=3, f(N)=N2.5

logba = 2, so Nlogb = N2

Using case 3 since f(N) is Ω(N0+ε), epsilon=.5 and af(N/b)=9f(N/3)= 9(N/3)2.5=(1/3)0.5f(n).

Therefore T(n) is O(N2.5)

Candidates can also practice 110+ Mock tests for exams like GATE, NIELIT with BYJU’S Exam Prep Test Series check the following link:

Click Here to Avail GATE CSE Test Series!

Thanks