Floating Point Representation – Details, Types & Needs

By BYJU'S Exam Prep

Updated on: September 25th, 2023

In computing, floating point (FP) representation approximates real numbers with a formula of the form significand × base^exponent, which lets a fixed number of bits trade range against precision. As a result, floating point representation is widely used in systems that must quickly process both very small and very large real numbers.

The Institute of Electrical and Electronics Engineers (IEEE) defined standard 32-bit and 64-bit encodings for floating point numbers, known as the IEEE 754 standard. An IEEE 754 floating point number has three fields:

- Sign

- Exponent

- Significand (mantissa)

What is Floating Point Representation?

Binary numbers can also be expressed in exponential (scientific) form, and representing them this way is known as floating point representation. A floating point number is split into two parts: a signed, fixed-point number called the mantissa, and an exponent that indicates where the binary point actually lies.

The IEEE 754 standard, developed by the IEEE (Institute of Electrical and Electronics Engineers), also stores the sign as a separate bit, with 0 denoting a positive value and 1 denoting a negative value.
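A minimal Python sketch of splitting a number into sign, mantissa and exponent. It relies on the standard `math.frexp`, which returns a mantissa with 0.5 ≤ |m| < 1, i.e. a signed fixed-point binary fraction as described above; this illustrates the idea, not the exact IEEE 754 bit layout. `split_float` is a hypothetical helper name and Python 3.9+ is assumed for the type hints.

```python
import math

def split_float(x: float) -> tuple[int, float, int]:
    """Split x into (sign_bit, mantissa, exponent) so that
    x == (-1)**sign_bit * mantissa * 2**exponent, with 0.5 <= mantissa < 1."""
    # copysign also detects -0.0, which compares equal to 0.0
    sign_bit = 1 if math.copysign(1.0, x) < 0 else 0
    mantissa, exponent = math.frexp(abs(x))  # binary fraction and power of two
    return sign_bit, mantissa, exponent

for value in (6.5, -0.15625, 1024.0):
    s, m, e = split_float(value)
    print(f"{value} -> sign={s}, mantissa={m}, exponent={e}")
```

For 6.5 the mantissa is 0.8125 (binary 0.1101) and the exponent is 3, because 0.8125 × 2³ = 6.5.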

Floating Point Representation Types

IEEE 754 defines several formats; the two most widely used binary formats are:

- Single precision (32-bit)

- Double precision (64-bit)
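A short Python sketch of the practical difference between the two formats. It assumes Python's built-in `float` is an IEEE 754 double (true on mainstream platforms) and uses the standard `struct` module's `"f"` (4-byte) and `"d"` (8-byte) format codes; `to_float32` is a hypothetical helper.

```python
import struct

def to_float32(x: float) -> float:
    """Round x to the nearest single-precision value, then widen it back to a Python float."""
    # "<f" packs into 4 bytes (binary32); unpacking returns a binary64 Python float
    return struct.unpack("<f", struct.pack("<f", x))[0]

x = 0.1
print("bytes:", len(struct.pack("<f", x)), "vs", len(struct.pack("<d", x)))
print("double precision:", repr(x))
print("single precision:", repr(to_float32(x)))
```

Because 0.1 has no exact binary representation, rounding it to 24 significant bits instead of 53 leaves a visibly larger error.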

Single Precision Floating Point Representation

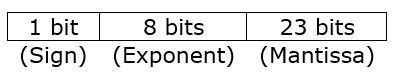

The single-precision floating-point representation (also known as FP32 or float32) is a computer number format that uses a floating radix point to express a wide dynamic range of numeric values. The IEEE 754 standard defines a binary32 as having the following characteristics:

- 1 bit for the sign

- 8 bits for the exponent

- Significand precision: 24 bits (23 explicitly stored)

The single-precision bit layout is therefore: sign (bit 31), exponent (bits 30 to 23) and fraction (bits 22 to 0), 32 bits in total.
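The field layout can be checked with a small Python sketch that reinterprets the four bytes of a binary32 value as an unsigned integer and masks out each field. It uses only the standard `struct` module; `float32_fields` is a hypothetical helper name.

```python
import struct

def float32_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, stored_exponent, fraction) of x encoded as IEEE 754 binary32."""
    # Pack as big-endian single precision, then read the same 4 bytes as an unsigned 32-bit int
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                # bit 31
    exponent = (bits >> 23) & 0xFF   # bits 30..23 (8 bits)
    fraction = bits & 0x7FFFFF       # bits 22..0 (23 bits)
    return sign, exponent, fraction

s, e, f = float32_fields(-6.5)
print(f"sign={s} exponent={e:08b} fraction={f:023b}")
```

For −6.5 (binary −1.101 × 2²) this gives sign 1, stored exponent 10000001 (129) and fraction bits 101 followed by twenty zeros.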

Exponent calculation

In the IEEE 754 single-precision format, the exponent is stored in offset-binary form with an offset of 127, known as the exponent bias.

Emin = 01H – 7FH = −126

Emax = FEH – 7FH = 127

Exponent bias = 7FH = 127

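Thus, to obtain the real exponent, the bias of 127 is subtracted from the stored exponent. The stored values 00H and FFH are reserved (for zero and subnormal numbers, and for infinity and NaN, respectively), which is why the normalized range runs from 01H to FEH.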
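A minimal Python sketch of decoding a normalized binary32 bit pattern by hand, using the formula (−1)^sign × 1.fraction × 2^(stored exponent − 127). It deliberately handles normalized numbers only; `decode_float32` and `BIAS32` are hypothetical names, and the `struct` call is only used to produce a test bit pattern.

```python
import struct

BIAS32 = 127  # exponent bias for binary32 (7FH)

def decode_float32(bits: int) -> float:
    """Decode a normalized IEEE 754 binary32 bit pattern: (-1)^s * 1.f * 2^(E - 127)."""
    sign = bits >> 31
    stored_exponent = (bits >> 23) & 0xFF
    fraction = bits & 0x7FFFFF
    # Only stored exponents 01H..FEH encode normalized numbers
    if not 0x01 <= stored_exponent <= 0xFE:
        raise ValueError("not a normalized number")
    real_exponent = stored_exponent - BIAS32   # remove the offset
    significand = 1 + fraction / 2**23         # restore the implicit leading 1
    return (-1) ** sign * significand * 2.0 ** real_exponent

bits = struct.unpack(">I", struct.pack(">f", -6.5))[0]
print(hex(bits), decode_float32(bits))
```

Here the stored exponent 129 yields a real exponent of 129 − 127 = 2.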

Double Precision Floating Point Representation

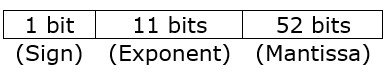

The double precision floating point representation (also known as FP64 or float64) is a computer number format that uses a floating radix point to express a wide dynamic range of numeric values. The IEEE 754 standard defines a binary64 as having the following characteristics:

- 1 bit for the sign

- 11 bits for the exponent

- Significand precision: 53 bits (52 explicitly stored)

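The double-precision bit layout is therefore: sign (bit 63), exponent (bits 62 to 52) and fraction (bits 51 to 0), 64 bits in total. The exponent bias is 1023 (3FFH), so stored exponents from 001H to 7FEH give real exponents from −1022 to +1023.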
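The same field extraction for binary64, as a Python sketch. It assumes Python's `float` is an IEEE 754 double (true on mainstream platforms) and uses the standard `struct` module's `"d"` and `"Q"` codes; `float64_fields` and `BIAS64` are hypothetical names.

```python
import struct

BIAS64 = 1023  # exponent bias for binary64 (3FFH)

def float64_fields(x: float) -> tuple[int, int, int]:
    """Return (sign, stored_exponent, fraction) of x encoded as IEEE 754 binary64."""
    # Read the 8 bytes of the double as an unsigned 64-bit integer
    (bits,) = struct.unpack(">Q", struct.pack(">d", x))
    sign = bits >> 63                    # bit 63
    exponent = (bits >> 52) & 0x7FF      # bits 62..52 (11 bits)
    fraction = bits & ((1 << 52) - 1)    # bits 51..0 (52 bits)
    return sign, exponent, fraction

s, e, f = float64_fields(-6.5)
# Rebuild the value from its fields (valid for normalized numbers only)
value = (-1) ** s * (1 + f / 2**52) * 2.0 ** (e - BIAS64)
print(s, e, e - BIAS64, hex(f), value)
```

Compared with the single-precision case, only the field widths and the bias change; the decoding formula is the same.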

Need for Floating Point Representation

A fixed-point representation places the binary point at a fixed position, so with a limited number of bits it cannot handle both extremely small and extremely large numbers: small values lose their significant digits and large values overflow. Floating point representations avoid this by letting the binary point "float", with an exponent recording where it actually lies.
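To make the limitation concrete, here is a Python sketch comparing a hypothetical Q16.16 fixed-point format (16 integer bits and 16 fraction bits in a signed 32-bit integer, chosen only for illustration) with IEEE 754 single precision, which also uses 32 bits. All helper names are hypothetical.

```python
import struct
from typing import Optional

FRAC_BITS = 16                      # hypothetical Q16.16 layout
Q_MIN, Q_MAX = -(2**31), 2**31 - 1  # range of a signed 32-bit integer

def to_q16_16(x: float) -> Optional[int]:
    """Encode x in Q16.16 by scaling by 2**16 and rounding; None if it overflows 32 bits."""
    q = round(x * (1 << FRAC_BITS))
    return q if Q_MIN <= q <= Q_MAX else None

def from_q16_16(q: int) -> float:
    return q / (1 << FRAC_BITS)

def to_float32(x: float) -> float:
    """Round x to IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

for x in (0.000001, 3.25, 1.0e9):
    q = to_q16_16(x)
    fixed = "overflow" if q is None else from_q16_16(q)
    print(f"{x:>10g}  fixed Q16.16: {fixed}  float32: {to_float32(x):g}")
```

With the point fixed, 0.000001 rounds to 0 and 10⁹ does not fit, while the floating point format represents both.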

Consider the decimal value 12.34 × 10^7, which can also be written as 0.1234 × 10^9, where 0.1234 is the fixed-point mantissa. The other part, the exponent 9, shows that the actual decimal point lies 9 places to the right of the point written in the mantissa (a negative exponent would move it to the left).
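The rewrite from 12.34 × 10^7 to 0.1234 × 10^9 can be automated. This Python sketch uses the standard `decimal` module (to avoid binary rounding noise) and its `adjusted()` and `scaleb()` methods; `normalize_decimal` is a hypothetical helper that assumes the "0.xxxx" mantissa convention of this example.

```python
from decimal import Decimal

def normalize_decimal(value: str) -> tuple[Decimal, int]:
    """Rewrite a nonzero decimal as mantissa * 10**exponent with 0.1 <= |mantissa| < 1."""
    d = Decimal(value)
    if d == 0:
        raise ValueError("zero has no normalized form")
    # adjusted() is the power of ten of the most significant digit (8 for 12.34E7)
    exponent = d.adjusted() + 1
    mantissa = d.scaleb(-exponent)  # move the decimal point by `exponent` places
    return mantissa, exponent

m, e = normalize_decimal("12.34E7")
print(f"{m} x 10^{e}")
```

A value smaller than 0.1 gets a negative exponent; for example 0.005 becomes 0.5 × 10^−2.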

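A floating point representation is so named because the point can be shifted to any position as long as the exponent is adjusted accordingly. By convention, numbers are stored in normalized form. IEEE 754 places the binary point immediately to the right of the first nonzero (significant) bit, so every normalized binary significand has the form 1.xxx…; since that leading 1 is always present, it is not stored, which is why the 24-bit single-precision significand needs only 23 stored bits. (The 0.1234 mantissa above follows the other common convention, with the point just to the left of the first nonzero digit.)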
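A Python sketch of the IEEE 754 normalization convention. It converts the `math.frexp` result (mantissa in [0.5, 1)) into the 1.xxx form by shifting the point one place to the right, and compares it with the standard `float.hex()`, which prints a double in the same normalized form: the digit before the point is the implicit leading 1 and the 13 hexadecimal digits after it are the 52 stored fraction bits. `normalized_binary` is a hypothetical helper and is meant for nonzero inputs.

```python
import math

def normalized_binary(x: float) -> tuple[float, int]:
    """Return (significand, exponent) with x == significand * 2**exponent and 1 <= |significand| < 2."""
    m, e = math.frexp(x)   # 0.5 <= |m| < 1
    return m * 2, e - 1    # shift the binary point one place right

for x in (6.5, 0.15625):
    sig, exp = normalized_binary(x)
    print(f"{x} = {sig} * 2^{exp}   hex form: {x.hex()}")
```

For 6.5 (binary 110.1) the point moves two places left, giving 1.101 × 2², i.e. significand 1.625 and exponent 2.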