Cache Memory Study Notes for GATE CSE

By BYJU'S Exam Prep

Updated on: September 25th, 2023

Cache memory is a small, fast memory used to keep pace with high-speed CPUs. These notes explain cache memory in depth for GATE CSE, NIELIT, and similar exams.


Cache Memory

- Cache memory is an intermediate memory between the CPU and main memory. It holds a copy of main memory data so that the CPU can access that data in less time.

- Cache memory is used to speed up the process of accessing the data from the main memory.

- According to the memory hierarchy design, data is transferred from main memory to cache memory in blocks. For this purpose, both memories are organised into blocks of the same size.

- Number of blocks in main memory = Main Memory Size / Block Size

- Number of blocks (lines) in cache memory = Cache Memory Size / Block Size
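As a quick illustration of the two formulas above, here is a minimal sketch. The function name `block_counts` and the sizes used are assumptions chosen only for the example.

```python
def block_counts(main_memory_bytes, cache_bytes, block_bytes):
    """Return (blocks in main memory, lines in cache) for sizes given in bytes."""
    return main_memory_bytes // block_bytes, cache_bytes // block_bytes


# Hypothetical sizes: 64 KB main memory, 1 KB cache, 16-byte blocks.
mm_blocks, cache_lines = block_counts(64 * 1024, 1024, 16)
print(mm_blocks, cache_lines)
```

With these example sizes, main memory has 4096 blocks and the cache has 64 lines.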

Mapping of Cache Memory

The transfer of data from main memory to cache memory is referred to as the mapping process. Three types of mapping are considered:

- Direct mapping

- Associative mapping

- Set associative mapping

Direct Mapping:

- The location of the memory block in the cache (i.e. the block number in the cache) is the memory block number modulo the number of blocks in the cache.

- The CPU address is divided into three fields (Tag, Line Offset, and Word Offset).

| Tag | Line Offset | Word Offset |

- The lower-order line address bits are used to access the cache directory. Since multiple line addresses map to the same location in the cache directory, the upper line address bits (tag bits) must be compared with the tag stored in the directory to confirm a hit. If the comparison fails, the result is a cache miss.
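The field split described above can be sketched as follows. This is only an illustration: the function name `split_direct_mapped` and the cache parameters are assumptions. It uses plain integer arithmetic, which for power-of-two sizes gives the same values as slicing the address into bit fields.

```python
def split_direct_mapped(address, num_lines, block_size):
    """Split a byte address into (tag, line offset, word offset).

    num_lines  -- number of blocks (lines) in the cache (assumed parameter)
    block_size -- bytes per block (assumed parameter)
    """
    word_offset = address % block_size       # position inside the block
    block_number = address // block_size     # main memory block number
    line = block_number % num_lines          # cache line = block number mod lines
    tag = block_number // num_lines          # remaining upper bits
    return tag, line, word_offset


# Hypothetical cache: 64 lines of 16 bytes each.
print(split_direct_mapped(0x1234, num_lines=64, block_size=16))
```

Two addresses whose block numbers differ by a multiple of 64 land on the same line here and are told apart only by their tags.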

Associative Mapping:

- A memory block can be placed in any cache block.

- This scheme permits any word from main memory to be stored anywhere in the cache.

- It can be implemented using a comparator for each tag and a multiplexer (MUX) over all the blocks to select the one that matches.

| Tag | Word Offset |

- The requested address is compared against all entries in the directory. If the requested address is found (a directory hit), the corresponding location in the cache is fetched and returned to the processor; otherwise, a miss occurs.
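The directory search described above might look like the sketch below. Hardware compares all tags in parallel; the loop here is only a sequential stand-in. The name `associative_lookup` and the directory contents are assumptions for the example.

```python
def associative_lookup(directory, address, block_size):
    """Search every directory entry for the tag of the requested address.

    directory -- list of stored tags (main memory block numbers), None = empty line
    Returns (cache line, word offset) on a hit, or None on a miss.
    """
    tag = address // block_size      # in a fully associative cache the tag is the block number
    offset = address % block_size
    for line, stored_tag in enumerate(directory):
        if stored_tag == tag:
            return line, offset      # directory hit
    return None                      # miss


# Hypothetical 4-line directory with 16-byte blocks.
directory = [7, None, 291, 12]
print(associative_lookup(directory, 0x1234, 16))  # block 291 is cached
print(associative_lookup(directory, 0x0000, 16))  # block 0 is not cached
```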

Set Associative Mapping:

- A set-associative cache scheme is a combination of fully associative and direct-mapped schemes.

- Set-associative mapping improves on direct mapping in that each word of cache can store two or more words of memory under the same index address. Each data word is stored together with its tag, and the tag-data items held in one word of cache form a set.

- Set number = (main memory block number) MOD (number of sets)

- Number of sets = Number of cache blocks / Number of ways

- The cache maps each requested address directly to a set (akin to how a direct-mapped cache maps an address to a line), and then it treats the set as a fully associative cache.

| Tag | Set No | Word Offset |
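The set-number formulas above can be sketched as follows. The function name `split_set_associative` and the cache parameters are assumptions used only for illustration.

```python
def split_set_associative(address, num_blocks, ways, block_size):
    """Split a byte address into (tag, set number, word offset).

    num_blocks -- total blocks in the cache (assumed parameter)
    ways       -- blocks per set (assumed parameter)
    """
    num_sets = num_blocks // ways            # No. of sets = No. of blocks / No. of ways
    word_offset = address % block_size
    block_number = address // block_size
    set_no = block_number % num_sets         # Set No = Block No MOD No. of sets
    tag = block_number // num_sets
    return tag, set_no, word_offset


# Hypothetical cache: 64 blocks, 4-way set associative, 16-byte blocks (16 sets).
print(split_set_associative(0x1234, num_blocks=64, ways=4, block_size=16))
```

Setting `ways=1` reduces this to the direct-mapped split, and setting `ways=num_blocks` leaves a single set, which is the fully associative case.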

Replacement Algorithms:

- In cache design, a replacement algorithm decides which block to evict when a new block must be brought in and the cache (or the target set) is full.

- The main block replacement policies are:

- Random: The block to be replaced is chosen at random, without regard to how it has been used.

- First In First Out: The block that has been in the cache the longest (the oldest timestamp) is replaced when a request arrives for a new block.

- Least Recently Used: The block that has not been referenced for the longest time is replaced by the required block.
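To see how FIFO and LRU can differ, the sketch below counts misses for a fully associative cache under each policy. The function names and the reference string are assumptions chosen for the example, not part of the original notes.

```python
from collections import OrderedDict, deque


def count_misses_fifo(references, capacity):
    """Count misses when the oldest-loaded block is evicted first."""
    cache, load_order, misses = set(), deque(), 0
    for block in references:
        if block in cache:
            continue                              # a hit does not change FIFO order
        misses += 1
        if len(cache) == capacity:
            cache.discard(load_order.popleft())   # evict the earliest-loaded block
        cache.add(block)
        load_order.append(block)
    return misses


def count_misses_lru(references, capacity):
    """Count misses when the least recently used block is evicted first."""
    cache, misses = OrderedDict(), 0
    for block in references:
        if block in cache:
            cache.move_to_end(block)              # mark as most recently used
            continue
        misses += 1
        if len(cache) == capacity:
            cache.popitem(last=False)             # evict the least recently used block
        cache[block] = True
    return misses


# Hypothetical block reference string and a 3-block cache.
refs = [1, 2, 3, 1, 4, 1, 5]
print(count_misses_fifo(refs, 3), count_misses_lru(refs, 3))
```

For this reference string FIFO incurs 6 misses and LRU incurs 5, because FIFO evicts block 1 even though it was just reused.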

Updating Policies

There are two updating policies for the cache system:

- Write through policy.

- Write back policy.

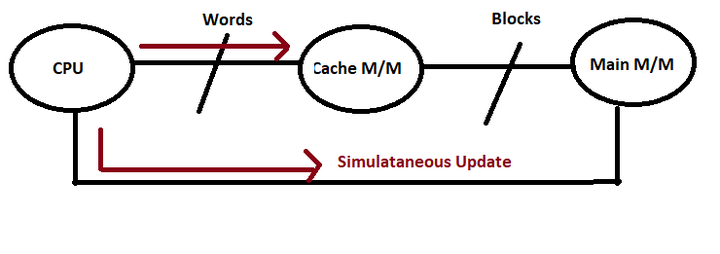

Write Through Policy:

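- In this updating technique, the CPU performs each write operation simultaneously on cache memory and main memory.

- The coherence problem does not arise between cache and main memory, because both always hold the same data.

- The inclusion property always holds: every block in the cache has an identical copy in main memory.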

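Average access time, $T_{avg} = (f_{read} \times T_{avg,read}) + (f_{write} \times T_{avg,write})$

Average time to read the data, $T_{avg,read} = H_r T_c + (1 - H_r)(T_m + T_c)$

Average time to write the data, $T_{avg,write} = H_w T_w + (1 - H_w)(T_m + T_w)$

Here $f_{read}$ and $f_{write}$ are the fractions of read and write operations, $H_r$ and $H_w$ are the read and write hit ratios, $T_c$ is the cache access time, $T_m$ is the main memory access time, and $T_w$ is the write time.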
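A small numeric sketch of the write-through formulas above follows. The function name `write_through_avg_time` and all timing values are assumptions; in particular, setting the write time equal to the main memory time is just one plausible choice for the example.

```python
def write_through_avg_time(f_read, f_write, h_r, h_w, t_c, t_m, t_w):
    """Average access time under write through, following the formulas above."""
    t_avg_read = h_r * t_c + (1 - h_r) * (t_m + t_c)
    t_avg_write = h_w * t_w + (1 - h_w) * (t_m + t_w)
    return f_read * t_avg_read + f_write * t_avg_write


# Hypothetical workload: 80% reads, 20% writes, 90% hit ratios,
# 10 ns cache, 100 ns main memory, 100 ns write time.
print(write_through_avg_time(0.8, 0.2, 0.9, 0.9, t_c=10, t_m=100, t_w=100))
```

With these assumed numbers the read average is 20 ns, the write average is 110 ns, and the overall average is about 38 ns.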

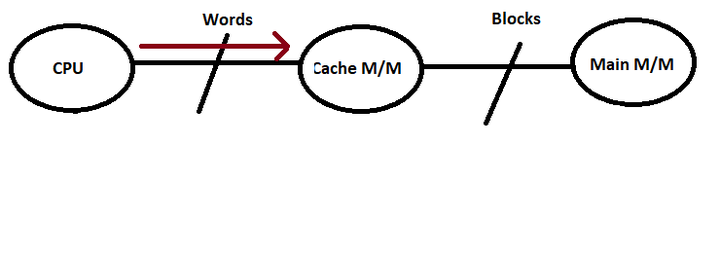

Write Back Policy:

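- In this organization, updates are made only in the cache memory. Main memory is not updated at the same time; a modified block is copied back to main memory when it is replaced.

- A coherence problem can arise, because the cache may hold a newer copy of a block than main memory.

- Inclusion can fail here: a modified block in the cache differs from its copy in main memory until it is written back.

- This design keeps one extra bit (the dirty bit) in every line to indicate whether the data has been modified.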

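Average access time, $T_{avg} = (f_{read} \times T_{avg,read}) + (f_{write} \times T_{avg,write})$

Average time to read the data, $T_{avg,read} = H_r T_c + (1 - H_r)\left[p_{dirty}(T_m + T_m + T_c) + p_{clean}(T_m + T_c)\right]$

Average time to write the data, $T_{avg,write} = H_w T_c + (1 - H_w)\left[p_{dirty}(T_m + T_m + T_c) + p_{clean}(T_m + T_c)\right]$

Here $p_{dirty}$ and $p_{clean}$ are the fractions of replaced blocks that are dirty and clean. A dirty block costs an extra $T_m$ because it must be written back to main memory before the new block is fetched.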
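The write-back formulas above can be evaluated with a short sketch like the one below. The function name `write_back_avg_time` and the numbers are assumptions for illustration; the clean fraction is taken as one minus the dirty fraction.

```python
def write_back_avg_time(f_read, f_write, h_r, h_w, t_c, t_m, dirty_fraction):
    """Average access time under write back, following the formulas above."""
    clean_fraction = 1 - dirty_fraction
    # On a miss, a dirty victim is written back (T_m) before the fetch (T_m + T_c).
    miss_time = (dirty_fraction * (t_m + t_m + t_c)
                 + clean_fraction * (t_m + t_c))
    t_avg_read = h_r * t_c + (1 - h_r) * miss_time
    t_avg_write = h_w * t_c + (1 - h_w) * miss_time
    return f_read * t_avg_read + f_write * t_avg_write


# Hypothetical workload: 80% reads, 20% writes, 90% hit ratios,
# 10 ns cache, 100 ns main memory, half of the replaced blocks dirty.
print(write_back_avg_time(0.8, 0.2, 0.9, 0.9, t_c=10, t_m=100, dirty_fraction=0.5))
```

With these assumed numbers the miss time is 160 ns and the average access time is about 25 ns.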

Types of Cache Misses

- Compulsory Miss: This miss occurs when a block is referenced for the very first time. These misses can be reduced by increasing the block size.

- Capacity Miss: This miss occurs when the cache is too small to hold all the blocks the program is actively using, so a block is evicted and later referenced again. These misses can be reduced by increasing the cache size.

- Conflict Miss / Interference Miss: These misses occur when too many memory blocks map to the same cache line or cache set. These misses can be reduced by increasing associativity.
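The effect of associativity on conflict misses can be seen in a small simulation. This is a sketch under assumptions: the function name `count_misses`, the LRU policy within each set, and the reference string are all chosen only for illustration.

```python
def count_misses(references, num_blocks, ways):
    """Count misses in a set-associative cache with LRU replacement per set.

    ways=1 models a direct-mapped cache; ways=num_blocks is fully associative.
    """
    num_sets = num_blocks // ways
    # Each set is a list ordered from least to most recently used block.
    sets = [[] for _ in range(num_sets)]
    misses = 0
    for block in references:
        lines = sets[block % num_sets]
        if block in lines:
            lines.remove(block)          # hit: re-appended below as most recent
        else:
            misses += 1
            if len(lines) == ways:
                lines.pop(0)             # evict the least recently used block
        lines.append(block)
    return misses


# Hypothetical pattern: blocks 0 and 4 alternate in a 4-block cache.
refs = [0, 4, 0, 4, 0, 4]
print(count_misses(refs, num_blocks=4, ways=1))  # direct mapped
print(count_misses(refs, num_blocks=4, ways=2))  # 2-way set associative
```

In the direct-mapped case both blocks compete for line 0, so all 6 references miss. With 2 ways they share a set, leaving only the 2 compulsory misses.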
